LLM chatbots are supposed to make our lives easier. They’re designed to handle customer support, answer questions, and even try to make us laugh (poorly). But let’s be real: most of the time, they’re just frustrating. Developers amazed by the affordances of LLMs sometimes forget that the real people using their applications have no idea what “Prompt Engineering” is.

If you haven’t read Don Norman’s The Design of Everyday Things, you should. Not just because it’s a classic, but because it’s a masterclass in how to create products that don’t make people want to rip their eyes out. And let’s be honest, as developers, we’re often guilty of focusing too much on the shiny bits of tech and not enough on making things that are, well, usable.

The Curse of Knowledge

Engineers working on AI applications are incredibly intelligent people. They know about things like machine learning, natural language processing, and all that other technical jazz that makes chatbots go brrrr. The problem arises when developers get so lost in the weeds of how powerful LLMs can be that they forget they need to guide their users toward using the amazing tool they’re building effectively.

This is the trap commonly known as the “curse of knowledge,” and it is exactly the kind of designer blind spot Norman warns about. Developers assume users understand the inner workings of AI, or at least enough to know what to ask and how to ask it. Spoiler alert: they don’t. Most people just want their questions answered without jumping through hoops.

So, instead of making users feel like they’re talking to an AI expert, developers should focus on making the experience as simple and intuitive as possible. Think less about showing off the AI’s capabilities and more about actually solving the user’s problem.

Affordances and Signifiers

Norman talks a lot about affordances and signifiers. Affordances are the actions a thing makes possible, and signifiers are the cues that tell people what those actions are and where to perform them. Put simply, things should work the way they look like they should. This is crucial in chatbot design. If a user asks a question, they should get an answer, not a confusing error message or a response that feels like it came from another planet.

Too often, chatbots give vague responses or redirect users in a way that makes no sense. “I’m sorry, I didn’t understand that” is the chatbot equivalent of a door handle that looks like you should pull it, but actually needs to be pushed. It’s frustrating and makes users want to give up.

Chatbots need to clearly signal what they can and cannot do. If the bot can’t answer a specific type of question, it should gracefully handle the situation, maybe by offering suggestions for how to phrase the question differently, or by escalating to a human agent when necessary. The key is to make the user feel guided, not lost.
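One way to signal capabilities up front is to open the conversation with a short list of what the bot can do, each paired with an example phrasing. Here is a minimal sketch in Python. The `Capability` class, the `CAPABILITIES` list, and the support-bot scenario are all made up for illustration; swap in whatever your bot actually handles.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Capability:
    name: str     # what the bot can do
    example: str  # sample phrasing shown to the user as a signifier


# Hypothetical capabilities for an illustrative support bot.
CAPABILITIES = [
    Capability("Track an order", "Where is my order #1234?"),
    Capability("Start a return", "I want to return my headphones"),
]


def welcome_message(capabilities: List[Capability]) -> str:
    """Build an opening message that tells users what they can ask for."""
    lines = ["Hi! Here is what I can help with:"]
    for cap in capabilities:
        lines.append(f'- {cap.name} (try: "{cap.example}")')
    # State the limit explicitly so users know where the bot stops.
    lines.append("For anything else, I can connect you with a person.")
    return "\n".join(lines)


print(welcome_message(CAPABILITIES))
```

The example phrasings do the work of a signifier: they show users how to ask, so nobody has to guess at a blank text box.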

Error Messages: We Can Do Better

We’ve all had that experience where we ask a chatbot something simple, and it responds with an error or a completely irrelevant answer. This is where Norman’s lessons really come into play. An error message in a chatbot should be as clear as day, telling the user what went wrong and how to fix it.

Instead, what do we usually get? “I’m not sure how to help with that.” Great, thanks for nothing. Chatbots need to be better at recognizing when they’re out of their depth and then providing useful next steps. Whether it’s rephrasing the question, offering related information, or handing off to a human, the bot should never leave the user hanging.
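To make that concrete, here is a rough sketch of a fallback that never dead-ends. It assumes your bot already tracks how many turns in a row it failed to understand and can produce a list of likely intents. The `fallback_reply` function, the `MAX_FAILED_TURNS` threshold, and the returned dictionary shape are hypothetical choices, not part of any particular framework.

```python
from typing import Dict, List

# Assumed threshold: hand off after two misunderstood turns in a row.
MAX_FAILED_TURNS = 2


def fallback_reply(failed_turns: int, suggestions: List[str]) -> Dict[str, str]:
    """Pick a next step when the bot could not understand the user."""
    if failed_turns >= MAX_FAILED_TURNS:
        # Out of depth: stop guessing and escalate to a human.
        return {
            "action": "handoff",
            "message": "I'm having trouble with this one, so I'm "
                       "connecting you with a person who can help.",
        }

    message = "I couldn't match that to something I can help with."
    if suggestions:
        # Offer related options instead of a bare apology.
        options = "\n".join(f"- {s}" for s in suggestions)
        message += " Did you mean one of these?\n" + options
    else:
        # Nothing close enough to suggest, so ask for a rephrase.
        message += " Could you try asking it a different way?"
    return {"action": "clarify", "message": message}


print(fallback_reply(0, ["Track an order", "Start a return"])["message"])
print(fallback_reply(2, [])["message"])
```

Every branch ends with something the user can act on: pick a suggestion, rephrase, or talk to a person.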

Simplicity Isn’t Stupid - It’s Essential

Developers love to show off what their AI can do, but sometimes, less is more. Norman would argue that simplicity is key, especially in chatbot design. The more complex and feature-heavy a chatbot is, the more likely it is to confuse users.

A good chatbot doesn’t need to do everything; it just needs to do a few things really well. Strip away the unnecessary features and focus on the core tasks users need help with. A chatbot that’s a jack-of-all-trades is often a master of none.

Applications Should Be Human-First

Chatbots are likely here to stay, but if we want them to be truly useful, developers need to focus less on the tech and more on the user experience. Don Norman’s The Design of Everyday Things offers a roadmap for making technology that people actually enjoy using.

The next time you’re designing a chatbot, ask yourself: is this bot making life easier for the user, or is it just adding another layer of frustration? Make it simple, make it clear, and above all, make it human.

Because if your chatbot can’t do that, no one’s going to care how advanced your AI is.

They’ll just stop using it.

So what should you do?

No matter what you’re building, do your best to make it as intuitive as possible for users of any background. Designs are like jokes; if they have to be explained, they probably aren’t very good.

Regarding chatbots in general, I personally feel that companies are overusing them to the point where they have lost a lot of their utility. The same (or a very similar) interface is presented to users with a severe lack of signifiers to guide them toward its intended purpose.

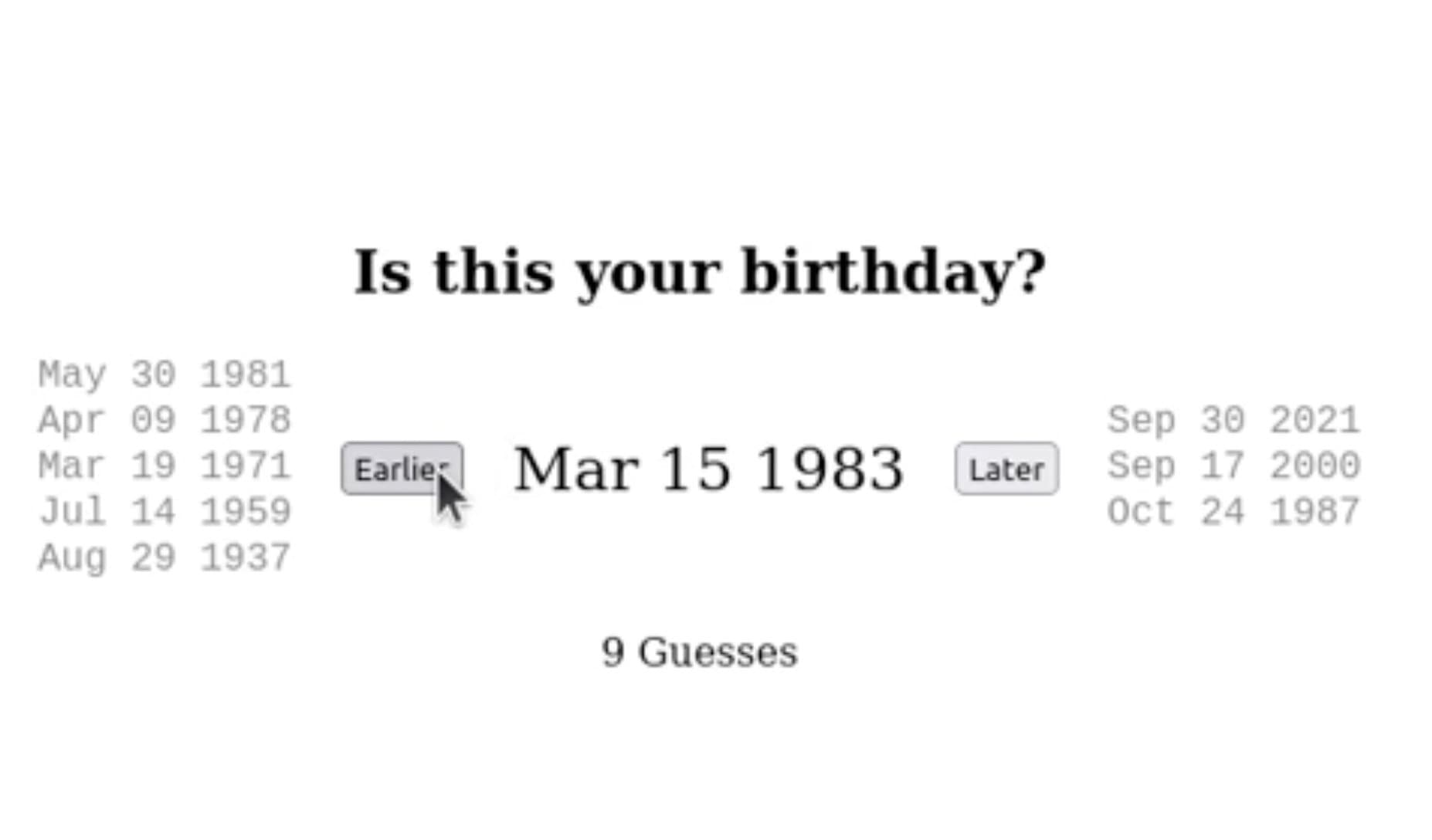

While our team was building adtwin, we made it a point to guide users through our ad script generation tool without making them negotiate a prompt. We ask them questions about their business and the product they want to advertise, and we hold their hand through the entirety of the ad generation process. We want users to experience the magic of using an LLM for generating ad copy without it feeling like every other chatbot available right now.

It’s a delightful experience, and I would encourage any product development team to take the same approach.
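
For a feel of what "guided questions instead of a prompt" can look like in code, here is a small sketch. To be clear, this is not adtwin's actual implementation. The `AdBrief` fields, the `QUESTIONS` wording, and the prompt template are all invented for illustration. The idea is simply that the user answers plain questions and the application writes the prompt.

```python
from dataclasses import dataclass


@dataclass
class AdBrief:
    """Answers collected from the user, one plain question at a time."""
    business_name: str
    product: str
    audience: str
    tone: str = "friendly"  # assumed default so users can skip this one


# Hypothetical questions shown to the user, keyed by the field they fill.
QUESTIONS = {
    "business_name": "What is your business called?",
    "product": "What product do you want to advertise?",
    "audience": "Who are you trying to reach?",
    "tone": "How should the ad sound? (optional)",
}


def build_prompt(brief: AdBrief) -> str:
    """Turn the structured answers into a prompt the user never sees."""
    return (
        f"Write a short ad script for {brief.product} "
        f"from {brief.business_name}. "
        f"The audience is {brief.audience}. "
        f"Keep the tone {brief.tone}."
    )


brief = AdBrief("Acme Bikes", "a folding commuter bike", "city commuters")
print(build_prompt(brief))
```

The user only ever sees the questions. The prompt engineering stays where it belongs, inside the product.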